Destructive Destruction? An Ecological Study of High Frequency Trading


As the financial crisis fastens its grip ever tighter around the means of human and natural survival, the age of the algorithm has hit full stride. This phase-shift has been a long time coming of course, and was undoubtedly as much a cause of the crisis as its effect, with self-propelling algorithmic power replacing human labour and judgement and creating event fields far below the threshold of human perception and responsiveness.

How is High Frequency Trading’s drive to efficiency affecting market dynamics as a whole? In their analysis of the financial arms race, Inigo Wilkins and Bogdan Dragos find that far from beating entropy, algorithmic trading simply redistributes it more unequally than ever.

What follows is an account of the concepts of information and noise as they apply to an analysis of high frequency trading according to ‘heterodox economics’. This account will highlight the evolutionary path that has led to the present micro-structure of financial markets and allow for a diagnosis of the contemporary financial ecology. High Frequency Trading (HFT) is a subset of algorithmic trading which works at very low time horizons (100 milliseconds) and requires massive information processing capacities. Following recent developments such as flash crashes and various technical break-downs, it is crucial to unpack the black-box of algorithmic high-frequency trading in order to understand its potential impact on wider social and economic systems. This will entail the application of various scientific theories to the financial domain that extend classical models of reversible functions, and go beyond models based on efficiency and equilibrium, encompassing a much wider class of irreversible transformations.

According to heterodox economics, the development of thermodynamics brought an end to the dominance of classical physics in economic theory, in particular the dogma of the efficient markets hypothesis and reversion to equilibrium. It is this significant theoretical upheaval that allows Nicholas Georgescu-Roegen to say that the law of entropy is the basis of the economy of life at all levels.i Writing in the turbulent ’70s, amidst the oil crisis, he came to see that classical economic theory could no longer be an adequate model for addressing the huge tasks of third world underdevelopment, depletion of natural resources, increasing pollution, overpopulation, etc. In his attempt to deal with these issues, he recognised that the main barrier to repositioning economic theory on new grounds was its reliance on Newtonian mechanics. As the Midnight Notes Collective argue, Enlightenment thought was concomitant with a drive to the extraction of absolute surplus value during the first wave of real subsumption in the industrial revolution.ii Georgescu-Roegen advocated the replacement of this idealised paradigm with thermodynamics, whose second law (that the entropy of an isolated system tends to a maximum) would offer a much more fruitful theoretical foundation for economics. It should be noted at this point that although economic theory is even now still dominated by reversible models based on the supposition of efficiency and equilibrium at the abstract level, in practical terms thermodynamics had already significantly altered the political economy through the 19th century preoccupation with exhaustion, leading to a 'science of work' that concretised in the Taylorisation of the work-force after WW1.

The true novelty of Georgescu-Roegen's formulation lies in his proposal for a fourth law of thermodynamics, where it is not only energy that is subject to decreasing returns, but also matter; 'friction robs us of available matter'.iii He thus identifies an ultimate supply limit of low entropy matter-energy; a 'source of absolute scarcity' consisting of a terrestrial stock and a solar flow.iv This should not be understood as a reductionist account capable of explaining the causal structure of everything, but rather the identification of an abstract functional schematic whose explanatory coherence may be supplemented or extended by further theoretical devices. In particular, although thermodynamics can help to describe the conditions of class struggle and the divergence between market valuation mechanisms and the actual value of resources, it cannot account for the lived experiences of the former, and offers no substantial critique of the latter. However, it does allow for the reinsertion of the economic process into much wider physical, chemical and biological processes. For if the entropy law operates at all levels, then one can understand the economic process as a continual exchange between low and high entropy, just as dissipative systems maintain coherence through the reduction of energy gradients. An energy gradient is a differential, such as that between hot and cold or between disparate prices, whose value can be tapped through the application of work. This is a naturalised view of finance; however, it must be clearly stated that such a naturalisation does not entail a valorisation of present economic conditions. Rather, the economy, like the environment, exhibits a high degree of structural and functional redundancy, such that a great number of contingent modes of organisation are possible. Let's be clear here: to say that something is natural is not to say that it is good; after all, a tumour is natural. It is just to argue that it is subject to a materialist analysis, without claiming to exhaustively describe all its aspects. Moreover, we must not conflate biological and economic ecologies, but rather treat them in their specificity.

It is useful, at this point, to clarify the distinction between low and high entropy. For the purpose of elaborating an ecological economics, Georgescu-Roegen understands the economy as a process that transforms available free energy into unavailable bound energy, that is to say the exploitation of a gradient. The former may be understood both as specific concentrations of material-energetic structures, such as oil or gold, and the potentiality for value extraction offered by living labour; while the latter is exemplified by waste, pollution, highly diffuse forms of matter-energy such as heat, and those forms of dead labour that no longer afford value extraction.

The economic process is the modulation by which a certain dissipative system maintains itself by continually ‘inputting’ free energy and ‘outputting’ bound energy. This entails a local growth of efficiency, or increase in the throughput of energy, that evolves according to the maximum entropy principle (MaxEnt), where the entropy of the microstates that do not correspond to the successful application of a function or technology is maximised such that the energetic cost is minimised for a given utility.v This local reduction of entropy is ‘observer dependent’; however, it also necessarily results in an increase in ‘observer independent’ entropy according to the maximum entropy production principle (MEPP).vi Effectively this means that biological, technical and economic evolution all lead inevitably towards an amplification of entropy at the environmental level. Nevertheless there is a high degree of contingency that determines the rate of throughput.
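The information-theoretic notion of entropy that underlies the maximum entropy principle can be made concrete with a small Python sketch. It illustrates only the Shannon measure for discrete distributions, not the thermodynamic MEPP; the three example distributions and the function name `shannon_entropy` are assumptions introduced here for illustration. With no constraint other than that the probabilities sum to one, the uniform distribution carries the most entropy and a certain outcome carries none.

```python
import math


def shannon_entropy(probs):
    """Return the Shannon entropy, in bits, of a discrete distribution."""
    # Terms with p == 0 contribute nothing, so they are skipped.
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical distributions over four possible states (illustrative only).
uniform = [0.25, 0.25, 0.25, 0.25]   # nothing is known beyond the state count
skewed = [0.7, 0.1, 0.1, 0.1]        # some states are known to be likelier
certain = [1.0, 0.0, 0.0, 0.0]       # the state is fully known

h_uniform = shannon_entropy(uniform)
h_skewed = shannon_entropy(skewed)
h_certain = shannon_entropy(certain)

# The less an observer knows, the higher the entropy of the distribution.
print(h_certain, h_skewed, h_uniform)
```

In this reading, turning noise into information means moving from the uniform case towards the skewed or certain one; the entropy that disappears locally is relative to the observer doing the processing.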

Within the field of evolutionary economics the notions of energy and information gradients become essential in understanding the dynamics of socio-economic change. In this sense, a certain abstract evolutionary matrix is common to all open systems, whether physical, chemical, biological or socio-economic.

If there is an energy gradient available, a simple dissipative structure will exploit it. Similarly, if there is an information/knowledge gradient available, a socio-economic structure will grow and develop around this continual process of reduction.vii

Ever since Friedrich Hayek and Eugene Fama, information has been a crucial vector in understanding financial markets. For Hayek, markets are a way of collecting and aggregating available information. Fama understood efficient markets as reacting instantly to new information, thereby unproblematically reflecting all available information. While we certainly do not agree with the wider premises and conclusions of these liberal economists, it is important to understand economic systems as collective calculating devices that compute transient equilibriums.viii Balancing economic, computational and thermodynamic perspectives, markets may be defined as dissipative structures coping in an entropic/noisy environment by reducing both energetic and information gradients. This becomes particularly apparent in modern capitalist economies. The current swarm of financial actors, including human, non-human and hybrid systems, feeds off a social production of knowledge and its informational friction. An evolutionary process of variation, coordination and selection leads to differential levels of fitness and to huge asymmetries in terms of collecting and processing information, and hence to the creation of increasingly complex structures with higher rates of change. Such systems are characterised by non-linear risk situations featuring high interconnectivity and super-spreaders that amplify contagion.

In this sense, the main activity of finance is the bearing of uncertainty, but more precisely the reduction of an energetic and informatic gradient, fuelled by the ever-growing heterogeneity of the market.ix Financial actors are not only an intermediary between producers and users of information, but they also 'assume a hermeneutic function' of performative interpretation, and moreover occupy the point of overlap between an information network and a liquidity network.x Maintaining itself at that particular juncture allows the financial intermediary to access and reduce a very steep energy/information gradient. The investment bank therefore sits at the nexus of an informal information marketplace for price-relevant information.xi

Our argument is that High Frequency Traders (HFTs) are complex socio-technical systems that thrive both through the production of noise and by the reduction of information gradients, operating at a high rate of throughput and offsetting noise/entropy to the wider financial ecology. In order to explain these claims it is necessary to briefly chart the evolution of computing within finance and the subsequent appearance of algorithmic trading. From carrier pigeons and the transatlantic telegraph cable to contemporary ICT, finance has always been a site for intensive technical innovation. This is no surprise, inasmuch as financial actors thrive by accessing and reducing information gradients and exploiting communication inefficiencies.

More recently, the shift from open-outcry face-to-face trading to automated electronic trading has represented a huge leap in efficiency and the reduction of transaction costs. However, even Milton Friedman was aware that there is an ‘intrinsic paradox built into the assumption of efficient markets’: since efficiency is maintained by the detection of inefficiencies, the closer the market comes to absolute efficiency the fewer inefficiencies can be discovered, so the market can never achieve absolute efficiency.xii There is thus a complex dialectical interplay between drives to market efficiency and inefficiency. As the market approaches efficiency, there are fewer opportunities for arbitrage by informed traders (who gauge the discrepancy between the current price and the fundamental value of the underlying asset), and uninformed 'noise traders' progressively dominate the market.xiii This inevitably leads to the inflation of bubbles, with the subsequent collapse to fundamental values (when it is not brought on through market manipulation) occurring in an entirely unpredictable manner.

The market thus oscillates asymptotically around the attractor of zero information friction in an incomputably random orbit. While this movement receives its impetus from the dialectical, or apparently co-constitutive relation between efficiency and inefficiency, its trajectory and effects are far from reversible, resulting in the non-dialectical destruction of whole swathes of economic actors largely at the base of steep energy gradients.xiv Witness the wave of repossessions following the sub-prime mortgage crisis, or the asymmetric distribution of debt organised by the austerity regime, a point made by Evan Calder Williams following Bordiga's description of capital as 'Murderer of the Dead.'xv

Ever since the mid-’80s, there has been an incredible growth in the adoption of ICT and algorithms in the marketplace. From the computer terminals that were simply assisting human traders to the contemporary HFT software, we have seen the emergence of this new financial ecosystem, a highly complex computational matrix.xvi Algorithms are no longer mere tools; they are active in analysing economic data, translating it into relevant information and producing trading orders.xvii This transition represents a new phase of real subsumption affecting all economic actors and social conditions. That is, if labour relations were reorganised around mechanics in the industrial revolution, and then around thermodynamics and cybernetics over the last two centuries, the current phase of real subsumption may be understood according to contemporary scientific transformations. This is often called the 'nano-bio-info-cogno revolution', and is based on distributed networks and ‘friction free’ systems (e.g. superconductors, ubiquitous computing). However, the importance of Georgescu-Roegen is his assertion that no such friction-free economy is possible, since the drive to efficiency is limited by the absolute scarcity of low entropy resources and met with a corresponding increase of exhaustion or resistance issuing from labour power.

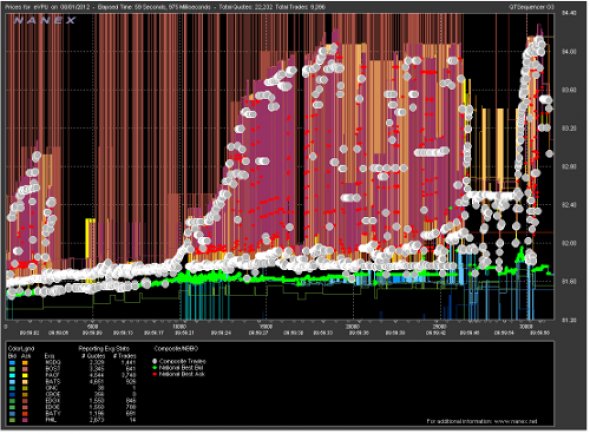

Neil Johnson et al. identify a ‘robot phase transition’ after 2006 where the sub-millisecond speed and massive quantity of robot-robot interactions exceed the capacity of human-robot interactions. They argue that operating at such timescales is intrinsically unstable and 'characterized by frequent black swan events with ultrafast durations'.xviii While Nassim Taleb's black swan theory is contentious, the conceptual core may be subtracted from his wider project, and refers to high-impact real contingencies as opposed to the structured randomness that casinos and quantum physics display. Analogous to the well-known effect in systems engineering where small cracks in a fuselage build up to a breaking threshold, financial friction is so high that micro-fractures in the form of mini flash-crashes proliferate, threatening the whole ecology.xix Moreover, through the logic of encapsulated coding they employ, algorithmic trading software platforms are intrinsically open to abusive practices, and represent highly opaque and consequently 'unworkable interfaces'.xx

In order to address the topic of HFT rigorously, we must not conflate the material specificities that define its heterogeneity; distinctions must be made between electronic, program and algorithmic trading, where HFT is a heterogeneous subset of all three.xxi ‘The universe of computer algorithms is best understood as a complex ecology of highly specialized, highly diverse, and strongly interacting agents.’xxii Within this line of technical and financial innovations, we can see various types of trading strategies that employ an equally diverse population of market order types. Further, from an ecological perspective one can distinguish between various trading and execution algorithms, but also ‘predatory’ relationships. ‘Strategies, markets and regulations co-evolve in competitive, symbiotic or predator-prey relationships as technology and the economy change in the background.’xxiii

For example, pairs trading strategies (whose computational costs are so high they only took off after the ’80s ICT revolution) unilaterally feed on the predictable price reversals engendered by portfolio balancing, just as short-term strategies prey on their long-term counterparts to the point of extinction.xxiv Certain ‘species’ try to efficiently execute a trade, so as to achieve minimal market impact. They split large orders into smaller packs and execute them at certain time intervals. More evolved versions, like ‘volume-weighted average price’ (VWAP) algorithms, employ complex randomisation functions coupled with econometrics to optimise the size and execution times depending on overall trading volumes.xxv Moreover, new ecological niches, known as 'dark pools', have emerged in order to obfuscate the execution of large orders.xxvi Other types, sometimes referred to as ‘predatory’ algorithms, try to profit from identifying and anticipating such trades.xxvii Perhaps the best example of such a frequency-dependent evolutionary path, one that is well-known for its compulsive non-adaptive drive, is the proliferation of low-latency algorithms that profit from the transmission speed differentials inherent in the geography of the globally integrated financial system, and the material transformations these informatic relations entail.xxviii
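The order-splitting behaviour of execution algorithms can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any firm's actual execution logic: the function names `slice_order` and `vwap`, the integer volume profile and the order size are all hypothetical, and the randomisation and econometric forecasting mentioned above are deliberately left out. A parent order is divided into child orders in proportion to the volume expected in each time interval, and the volume-weighted average price is the benchmark against which such an execution would typically be judged.

```python
def slice_order(total_shares, volume_profile):
    """Split a parent order into child orders proportional to expected volume.

    volume_profile holds non-negative integers, one per time interval
    (hypothetical expected volumes, not real market data).
    """
    total_volume = sum(volume_profile)
    if total_volume <= 0:
        raise ValueError("volume profile must have a positive total")
    # Integer share counts, rounded down, for each interval.
    children = [total_shares * v // total_volume for v in volume_profile]
    # Shares lost to rounding go to the busiest intervals, one share each.
    remainder = total_shares - sum(children)
    busiest = sorted(range(len(volume_profile)),
                     key=lambda i: volume_profile[i], reverse=True)
    for i in busiest[:remainder]:
        children[i] += 1
    return children


def vwap(prices, volumes):
    """Volume-weighted average price over a set of trades."""
    return sum(p * v for p, v in zip(prices, volumes)) / sum(volumes)


# Hypothetical U-shaped intraday profile: busy open and close, quiet midday.
profile = [30, 10, 10, 20, 30]
child_orders = slice_order(10_000, profile)
print(child_orders)

# Benchmark price for two hypothetical trades.
print(vwap([100.0, 101.0], [1, 3]))
```

Because the child orders follow a predictable pattern, a deterministic schedule of this kind is precisely what an anticipatory algorithm could detect, which is one way to read the role of randomisation in the more evolved versions.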

Image: ‘leaf insects such as the Phyllium frequently end up devouring each other'

Such strategies of camouflage, mimesis and deception are endemic in predator-prey relationships, fuelling a run-away propagation of non-adaptive mutations according to the non-equilibrium dynamics of the 'Red Queen Effect', and are modelled in evolutionary game theory as crypsis.xxix In his discussion of the pathological tendencies of technological capitalism Ray Brassier cites Roger Caillois' investigation of thanatropic mimicry, pointing out that such effects are irreducible to equilibrium models of dialectical resolution, and may often terminate in non-dialectical self-destruction.xxx ‘In mimicking their own food,’ Brassier writes, ‘leaf insects such as the Phyllium frequently end up devouring each other’.xxxi He continues, some pages later:

Enlightenment consummates mimetic reversibility by converting thinking into algorithmic compulsion: the inorganic miming of organic reason. Thus the artificialization of intelligence, the conversion of organic ends into technical means and vice versa, heralds the veritable realization of second nature […] in the irremediable form wherein purposeless intelligence supplants all reasonable ends.xxxii

Global finance can be seen as the staging ground for a continual redistribution of energy and information gradients; HFT is a prime example of this kind of evolutionary landscape. At a high enough level of liquidity, information friction and disparity allow for the emergence of computationally intensive systems that can effectively reduce gradients and extract rents.xxxiii While it is true that HFT accounts for a large part of market transactions, its profits are not the largest among market participants. In the end, all of the ‘bigger’ actors tolerate low-latency trading firms because they provide much needed liquidity. Nevertheless, HFT firms exist because, at certain volumes of trading, they enjoy a systematic advantage, which is the result of a ‘technicality’ of trading that is opaque to outsiders.xxxiv They manage to ‘survive’ by exploiting information gradients that ‘slower’ market participants are unable to access.

Nanex: On ... Aug 5, 2011, we processed 1 trillion bytes of data ... This is insane. ... It is noise, subterfuge, manipulation. ... HFT is sucking the life blood out of the markets... [A]t the core, [HFT] is pure manipulation.xxxv

Such reactions might seem dramatic, but they testify to the intense struggle going on in the computational matrix of finance every day. An ecological perspective emphasises the complex interdependencies between different financial ‘species’. Every participant is constantly processing market noise in an attempt to reduce it as much as possible to relevant information. The subsequent decisions and market orders represent more noise for the other participants, that is to say, an irreversible output of high-entropy. As long as there is enough disparity and enough heterogeneity in the market, high-frequency traders can profit from the underlying friction and produce more noise. It is precisely this persistent inefficiency of markets that informs heterodox economics.

Because of bounded rationality, financial traders can’t do everything at once – they tend to specialize. These specialized traders interact with one another as they perform basic functions, such as getting liquidity, offsetting risks and making profits. A given activity can produce profits or losses for another activity. Inefficiencies play the role of food in biological systems, providing profit-making possibilities that support the ecology.xxxvi

The interaction of heterogeneous actors with different time horizons and a variety of strategies produces the inefficiencies that make up an information gradient. Ecological economics understands the market as a food web, which can be described in terms of a gain matrix defining the interdependencies between different species. At the bottom there are the basal species – slaves, serfs, proletarians, free labour, consumers, account holders, etc. These strata are preyed on by those further up the food chain – pension funds, insurance companies, mutual funds, retail banks; and they in turn feed larger financial institutions, such as hedge funds, brokers, investment banks, proprietary trading HFTs, etc. Each financial actor exploits the inefficiencies of the prey species and in the process produces new inefficiencies, further increasing the information gradient. Within this complex ecology there is a gradual stabilisation of predator-prey relationships, but unlike an actual ecosystem, the financial system has a much higher rate of change, leading to more abrupt singular events like flash-crashes evolving according to an accelerated rate of punctuated equilibria, with multiple black swans and mass extinctions.xxxvii
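A gain matrix of this kind can be sketched as a small table of pairwise transfers. The Python below is a toy illustration only: the four species labels, the numbers, and the function names are hypothetical, and the zero-sum assumption (whatever one species gains from another, the other loses) is a simplification introduced here, not a claim about real markets. Entry `gain[i][j]` stands for the profit that species `i` extracts from species `j`.

```python
# Hypothetical species, ordered from the base of the food web upwards.
species = ["retail flow", "pension fund", "hedge fund", "HFT firm"]

# gain[i][j]: illustrative profit that species i extracts from species j.
# Negative entries are losses; the numbers are invented for the example.
gain = [
    [0, -3, -2, -1],
    [3,  0, -4, -2],
    [2,  4,  0, -1],
    [1,  2,  1,  0],
]


def net_gain(matrix):
    """Total profit or loss of each species across all its interactions."""
    return [sum(row) for row in matrix]


def predators_of(matrix, prey):
    """Indices of the species that extract a positive gain from `prey`."""
    return [i for i in range(len(matrix)) if matrix[i][prey] > 0]


def is_zero_sum(matrix):
    """Check that every pairwise gain is matched by an equal opposite loss."""
    n = len(matrix)
    return all(matrix[i][j] == -matrix[j][i]
               for i in range(n) for j in range(n))


for name, total in zip(species, net_gain(gain)):
    print(f"{name}: {total:+d}")

print([species[i] for i in predators_of(gain, 0)])
```

In this toy web the basal species loses to everyone above it, while the HFT firm gains a little from every other species without being the largest earner, which matches the qualitative picture given in the text rather than any measured data.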

During the 2010 flash crash, the main US stock index (which is a replica of the market as such) lost about 900 points in a few minutes, recovering most of that loss in the subsequent 15 minutes.xxxviii To put things into perspective, it represents the wipeout of about $1 trillion in the scope of minutes.xxxix

Following the media frenzy around this event, a variety of market actors have rushed to offer explanations for such a one-sided ‘social’ decision to sell. Part of the explanation lies in a lack of regulatory circuit breakers that would have automatically suspended the free-fall following the abnormally edgy HFT reaction to the discovery of a large 'iceberg' order. From black swans and fat fingers to possible market abuses like quote stuffing (the production of noise in order to obtain a good position in the order book queue), it seems that the causal structure of such events is so complex and opaque that there will never be a definitive explanation. However, we may state with confidence that such occurrences are the kind of irreversible outputs that characterise the hyper-diversity of contemporary socio-technical ecology. Both the SEC-CFTC (2010) report and the more recent Foresight review have shown that the impetus of the flash crash cannot be traced back to any firm engaged in HFT. Nevertheless, HFT strategies are the present culmination of a tendency towards efficiency of information throughput that inevitably ends up offsetting huge volumes of noise to the wider financial ecology. The question is not so much the good or bad intentions of HFT, but its impact on the resilience and robustness of the overall system. Though speculative trading is often driven by 'fictitious capital', it has real effects in the world, such as food price spikes that may lead to rioting or starvation. As Kliman argues, against underconsumptionist explanations such as those of David Harvey or Michael Hudson, the current crises are not causally reducible to fictitious finance; rather, both are the effects of deeper contradictions within capitalism, indexed by the inexorable tendency of the rate of profit to fall.xl

Following the sociology of information systems and risk, we could translate this as a result of exchanging high-frequency/low impact events for low-frequency/high impact ones or an exchange between low and high entropy.xli In this sense, any increase in efficiency (throughput) of one part of the system ends up being dissipated to the rest of the system as noise.xlii If HFT has any part to play in the flash crash, it is because it can be said to represent a real push for efficiency, but one that nevertheless produces unintended consequences for the rest of the financial ecology. Inasmuch as it diminishes the risk of trading through higher matching speeds, HFT allows buyers and sellers to reduce their transaction costs considerably. But the reduction of risk is not actually a reduction as such, and must be understood as a redistribution, or a parametrisation of the fitness landscape of the financial ecology. The crucial point here is that, given the present regulatory framework, which is supported by collusion and corruption at the national and international level (e.g. Federal Reserve, IMF, ratings agencies), a local growth in throughput efficiency enabling the accelerated tapping of low-entropy resources offsets the increased high-entropy to those that are not able to bear it. While the occupants of prime positions on the energy matrix loll around in a rich bath of liquidity, an increasing number are forced to pay for this exuberance with their jobs, their homes, and ultimately their lives. Ray Kurzweil's overzealous enthusiasm for the coming 'singularity', when human 'intelligence' is eclipsed by machines, appears wilfully myopic when we witness the effects of the 'robot phase transition'. Algorithmic hordes of parasitic vampire squids and zombie capitalists compulsively gorge on blood and brains, their exhausted victims lie all around, twitching to the non-periodic outbursts of transient code – the singularity turns out to be just another accelerating extension of exploitation.

Phenomena such as flash crashes are the inevitable outputs of a financial ecology that tends towards the non-linear emergence of noise saturation peaks. At such critical points of friction, something is bound to break. This does not simply apply to market crashes.xliii The present financial ecology maintains an unsustainable rate of throughput and a thanatropic mode of crypsis in the proliferation of strategies for digital subterfuge. In order to address the critical situation of contemporary finance, several liberal beliefs must be overcome: trust in the efficacy of competitive market mechanisms for computing equilibriums, such as the valuation of natural resources and labour; confidence in the capacity of finance to self-regulate, or to be merely a question of discovering the regulatory mechanisms for stabilisation, as it is for Michael Hudson etc.; and faith in the doctrine of sustainable development, which denies the fourth law of thermodynamics. Though finance tends towards efficiency and equity, it can never achieve these states since it feeds off the noise created by information asymmetries and structural inequality, and aggressively maintains these disparities in order to extract value from the resulting ecological niches. The demand for transparency is not enough. We should not be placated by a little noise reduction. Friction must be turned around and fed back into the central mechanisms of the system, rather than being dissipated into the margins. As Reza Negarestani argues, we must find 'alternative ways of binding exteriority... remobilized forms of non-dialectical negativity'.xliv

Inigo Wilkins <inigowilkins AT yahoo.com> is a PhD student at the Centre for Cultural Studies, Goldsmiths. His thesis title is ‘Irreversible Noise’. He is also a research fellow working with Mute magazine and the Post Media Lab at Lüneburg University on the question of the subsumption of sociality.

Bogdan Dragos <bogodin AT yahoo.com> is a PhD candidate at the Centre for Cultural Studies, Goldsmiths. His research interests comprise Philosophy of Technology, STS, Heterodox Economics and Sociology of Financial Markets. Currently, he is preparing a thesis on the co-evolution of technology and financial markets as complex socio-technical systems.

Footnotes

i Heterodox economics comprises thermo-economics, bio-economics, evolutionary economics and ecological economics; Nicholas Georgescu-Roegen, The Entropy Law and the Economic Process, Cambridge, Massachusetts: Harvard University Press, 1971, p.4.

ii Midnight Notes Collective (George Caffentzis, Monty Neill, Hans Widmer, John Willshire), ‘The Work/Energy Crisis and the Apocalypse’, Midnight Notes, Vol. II, #1, 1980.

iii Nicholas Georgescu-Roegen, ‘Energy Analysis and Economic Valuation’, Southern Economic Journal, 1979, 45, 4: p.1033.

iv Paul Burkett, Marxism and Ecological Economics: Toward a Red and Green Political Economy, Brill, 2006. p.145.

v The maximum entropy principle is the prime doctrine of Bayesian probability theory, which states that 'the probability distribution which best represents the current state of knowledge is the one with largest information-theoretical entropy.' http://en.wikipedia.org/wiki/Principle_of_maximum_.... In the evolutionary economics use of the term, it is thought as one with the 'maximum power principle', as formulated by Jaynes and Lotka, which describes the physics of evolutionary systems. Odum defines it thus: 'During self-organization, system designs develop and prevail that maximize power intake, energy transformation, and those uses that reinforce production and efficiency.' H.T.Odum 'Self-Organization and Maximum Empower', in Maximum Power: The Ideas and Applications of H.T.Odum, C.A.S.Hall (ed.), Colorado: Colorado University Press, 1995, p.311.

vi Carsten Herrmann-Pillath, Foundations of Evolutionary Economics, Edward Elgar, forthcoming. Available at SSRN: http://ssrn.com/abstract=1781469

vii Stanley Metcalfe and John Foster, Evolution and Economic Complexity, Edward Elgar Publishing, 2007, and Economic Emergence: an Evolutionary Economic Perspective, Max Planck Institute of Economics Jena, Evolutionary Economics Group, # 1112, 2011. This statement should not be taken dogmatically however, since as Ostrom demonstrates there are diverse ways in which collective self-organisation can govern common-pool resource problems that effectively reduce or stop gradients from being tapped at a rate that ends in a 'tragedy of the commons'. Elinor Ostrom, Governing the Commons: The Evolution of Institutions of Collective Action, Cambridge University Press, 2008.

viii Michel Callon and Fabian Muniesa, ‘Les marchés économiques comme dispositifs collectifs de calcul’, Réseaux 21(122), 2003, pp.189-233.

ix Alan Morrison and William Wilhelm Jr, Investment Banking: Institutions, Politics, and Law, Oxford: Oxford University Press, 2nd revised edition, 2008, p.4.

x Laurence Gialdini and Marc Lenglet, Financial Intermediaries in an Era of Disintermediation: European Brokerage Firms in a MiFID Context, 2010, p.23. Available at SSRN: http://ssrn.com/abstract=1616022 or http://dx.doi.org/10.2139/ssrn.1616022

xi Alan Morrison et al., op. cit., p. 72.

xii J. Doyne Farmer and Spyros Skouras, ‘An Ecological Perspective on the Future of Computer Trading’, The Future of Computer Trading in Financial Markets, UK Foresight Driver Review – DR6, 2011, p.12.

xiii Andrei Shleifer and Lawrence Summers, ‘The Noise Trader Approach to Finance’, Journal of Economic Perspectives, Volume 4, Number 2, 1990, pp.19-33.

xiv Despite the dialectic of efficiency and inefficiency there is a general trend toward efficiency indexed by the fall in bid-ask spreads. James Angel, Lawrence Harris, and Chester S. Spatt, ‘Equity Trading in the 21st Century’, Marshall School of Business Working Paper No. FBE 09-10, 2010. Available at SSRN: http://ssrn.com/abstract=1584026 or http://dx.doi.org/10.2139/ssrn.1584026

xv Evan Calder Williams, Combined and Uneven Apocalypse, Zero Books, 2011, p.188; Amadeo Bordiga argues that capital functions not just through the 'creative destruction' that Schumpeter identifies, but also through a 'destructive destruction' necessitated by the build-up of dead labour. Amadeo Bordiga, 'Murder of the Dead', Battaglia Comunista, No. 24, 1951; http://marxists.org/archive/bordiga/works/1951/murder.htm

xvi Marc Lenglet, ‘Conflicting Codes and Codings: How Algorithmic Trading is Reshaping Financial Regulation’, Theory, Culture & Society November 2011, 28: 44-66, p.2; Fabian Muniesa, Des marchés comme algorithmes: sociologie de la cotation électronique à la Bourse de Paris, Thèse de doctorat (PhD Thesis), Ecole des Mines de Paris, 2003.

xvii Ibid., p.3

xviii Neil Johnson, Guannan Zhao, Eric Hunsader, Jing Meng, Amith Ravindar, Spencer Carran and Brian Tivnan, ‘Financial black swans driven by ultrafast machine ecology’, arXiv, 7 February 2012.

xix Didier Sornette, Why Stock Markets Crash, Princeton University Press, 2003.

xx Michel Callon and Fabian Muniesa, ‘Les marchés économiques comme dispositifs collectifs de calcul’, Réseaux 21(122), 2003 or ‘Economic Markets as Calculative Collective Devices’, Organization Studies, 26(8), 2005, p.1236; Alexander R. Galloway, The Interface Effect, Polity, 2012, pp. 25-54.

xxi Aldridge offers a broad description of different ‘algorithmic’ classes into electronic, algorithmic, systematic, high-frequency, low-latency, market making, etc. Irene Aldridge, The Evolution of Algorithmic Classes, 2010, p. 4.

xxii J. Doyne Farmer, Spyros Skouras, An ecological perspective on the future of computer trading, p.6

xxiii Ibid.

xxiv Ibid., p.16

xxv Donald MacKenzie, Daniel Beunza, Yuval Millo, Juan Pablo Pardo-Guerra, Drilling Through the Allegheny Mountains: Liquidity, Materiality and High-Frequency Trading, 2012, p.9, http://www.sps.ed.ac.uk/staff/sociology/mackenzie_...

xxvi James Angel, Lawrence Harris, Chester S. Spatt, Equity Trading in the 21st Century, Marshall School of Business Working Paper No. FBE 09-10, 2010, http://ssrn.com/abstract=1584026 or http://dx.doi.org/10.2139/ssrn.1584026

xxvii Themis Trading 2008, 2009

xxviii Donald MacKenzie, op. cit.

xxix U. Dieckmann, P. Marrow, R. Law, 'Evolutionary cycling in predator-prey interactions: population dynamics and the Red Queen', Journal of Theoretical Biology, Volume 176, Issue 1, 7 September 1995, pp.91–102; G. D. Ruxton, T.N. Sherratt & M.P. Speed, Avoiding Attack: The Evolutionary Ecology of Crypsis, Warning Signals and Mimicry, Oxford University Press, 2004.

xxx Ray Brassier, Nihil Unbound: Enlightenment and Extinction, Palgrave Macmillan, 2007, p.43

xxxi Ibid.

xxxii Ibid., p.47

xxxiii Foresight: The Future of Computer Trading in Financial Markets (2012) Final Project Report. The Government Office for Science, London

xxxiv Donald MacKenzie, op. cit., p.20

xxxv Ibid., p.18

xxxvi J. Doyne Farmer, op. cit., p.6.

xxxvii Stephen Jay Gould & Niles Eldredge, ‘Punctuated equilibria: the tempo and mode of evolution reconsidered’, Paleobiology 3 (2), 115-151, 1977, p.145; J. Doyne Farmer, op. cit., p.14.

xxxviii On May 6th 2010, the US stock market experienced one of the most severe price drops in its history; the Dow Jones Industrial Average (DJIA) index dropped almost 9% from the beginning of the day - the second largest point swing, 1,010.14 points, and the biggest one-day point decline, 998.5 points, on an intra-day basis in the history of the DJIA index. Anton Golub, John Keane, Mini Flash Crashes, 2011, p.1 and Anton Golub, John Keane, Ser-Huang Poon, High Frequency Trading and Mini Flash Crashes, HFT Review, 2012, http://arxiv.org/pdf/1211.6667v1.pdf

xxxix For a time, equity prices of some of the world’s biggest companies were in freefall. They appeared to be in a race to zero. Peak to trough, Accenture shares fell by over 99%, from $40 to $0.01. At precisely the same time, shares in Sotheby’s rose three thousand-fold, from $34 to $99,999.99. Andrew Haldane, The race to zero, International Economic Association Sixteenth World Congress, Beijing, China, 2011, p.1, http://www.bankofengland.co.uk/publications/Docume...

xli Niklas Luhmann, Risk: A Sociological Theory, AldineTransaction, 2005; Jannis Kallinikos, Governing Through Technology: Information Artefacts and Social Practice, Palgrave Macmillan, Basingstoke, UK, 2011; Claudio Ciborra and O. Hanseth (eds.), Risk, complexity and ICT, Cheltenham: Edward Elgar Publishing, 2007; Elena Esposito, The Future of Futures: The Time of Money in Financing and Society, Cheltenham: Edward Elgar Publishing, 2011; Nicholas Georgescu-Roegen, The Entropy Law and the Economic Process, Harvard University Press: Cambridge, Massachusetts, 1971.

xlii Carsten Herrmann-Pillath, Foundations of Evolutionary Economics, Edward Elgar, forthcoming. Available at SSRN: http://ssrn.com/abstract=1781469

xliii The year 2012 has seen the NASDAQ debacle on the Facebook IPO, the problems with BATS’s own IPO and the recent collapse of Knight Capital Group. All of them have been attributed to software problems.

xliv Reza Negarestani, 'Drafting the Inhuman: Conjectures on Capitalism and Organic Necrocracy' in L.R. Bryant, N. Srnicek, G. Harman (eds.), The Speculative Turn – Continental Materialism and Realism, Melbourne: re.press, 2011, p.199.
