Intelligent Content (Agent Technology and the World Wide Web)


At last! Finally! In the Summer of 1996 agent technology was widely hailed in the media as a long-awaited remedy to the "Information Overload" of the Internet. Critics such as Jaron Lanier have pointed out some of the social implications of agent technology, warning us that users will be prone to limit their spheres of interest to match the limited AI capabilities of their agents. Our interests will be reduced to lowest common denominators.

The possible economic implications of agent technology have, however, remained largely undebated. In light of this gap in the debate, I would like to discuss how the World Wide Web, as a marketplace of ideas, goods and services, is changing, partly due to the rise of agent technology, and, more importantly, how these changes fit into a larger picture of longer-term socio-economic shifts.

Over the next few months, we will see the World Wide Web evolve as rapidly as it has in the past. It will become a more dynamic, yet more standardised and uniform place. Web pages will include more programming, be database-driven and involve less craftsmanship. We will be faced with isolated titbits of information rather than individually crafted hypertext landscapes capable of delivering coherent strings of meaning. More importantly, however, we will find that more of our information comes from fewer sources. This is surprising: one would expect agent technology to bring us content from unknown sites we would otherwise not have the time to discover. But agent technology is not developing in a vacuum. It is developing in the context of a steady commercialisation of the World Wide Web. The Web is spawning a distinct centre and periphery, content is becoming categorised, and Web economics are fusing with "Real Life" economics.

The World as We Knew It

The world as we knew it ended in the Summer of 1996. Until then, the production of Web sites followed an approach strongly reminiscent of handicraft. The browser frame was the window onto the world. There was little need to look at the workings of the server software or at database technology, or to examine what lay to the right, left or behind the browser window. The simplicity and intrinsic beauty of the Web production process allowed whole "Internet Suburbias" of private home pages to form. A small, proud cottage industry flourished.

One of the main reasons for the success of the World Wide Web model developed at the CERN laboratories in 1989 lay in its use of the "pull" approach to information gathering. Since the viewer was not force-fed information but could instead "browse" the hypertext landscape at will, it was possible to allow for virtually unrestricted publishing activity. Architectural and geographic metaphors such as "Information Landscapes" accurately described the World Wide Web.

The Coming of the Agent

The coming of agent technology was not a sudden occurrence. It can be seen as part of a longer-term technological trend. Here, I define the term "Agents" loosely as any software program that supplies the user with an automated, individual information selection mechanism. Basically, agent technology as I understand it is quite trivial; it ranges from the first simple UNIX email filtering systems to the database agent systems available on the World Wide Web today. World Wide Web search engines and database-driven web sites marked the beginning of the trend; the integration of AI "learning" capabilities and the automation of procedures can be viewed as incremental improvements. "Webcasting" schemes such as those of Netscape and the New York Times are also applications of the agent approach.

The skills of commercial agents today are still very primitive. Almost all agent technology available to the non-hacker consumer is embedded within web sites and can currently only process information specifically prepared for it in databases. Even at this stage, agent technology is making web sites increasingly complex to create. Soon, however, agents will free themselves from specific sites and migrate into our user interface. IBM's "Web Browser Intelligence" software, downloadable as freeware from the IBM site, takes the first step in this direction. At that stage, agents will become very powerful. They will act as bots, browsing the Web on our behalf.

'Good news! The lawless frontier of cyberspace has no restrictions against slavery so you can order up your own personal servants and make them hunt down information that fits your needs' (Online User, November 1996, p. 11). What matters here is that instead of actively seeking out information in the World Wide Web landscape, we will increasingly find that information is brought to us. The point of consumption of information is moving from the remote server to the user's own computer. In Business Week of September 9, 1996, Marc Andreessen remarked: "The metaphor for the Web is going to shift from pages to channels."

Agents and the Market

Over and over again we hear the argument that in order for the World Wide Web to become a legitimate commercial entity, it needs to change. The geography of the Web is responding to this need by forming a distinct periphery and centre. The centre is composed of sites with easily recognisable brand names. In a Hotwired editorial, "Market Forces," David Kline pointed out that brands have even greater power in an electronic marketplace than in "Real Life." Kline quotes the consultant Carol Holding as saying: "There are only 30,000 items in the average supermarket compared to tens of millions of pages on the Web. You've got to provide some mnemonic stimulus (you've got to have a pretty powerful brand identity with consumers) or you won't get noticed."

It is fascinating to see the extent to which non-commercial, private sites also subscribe to the branding metaphor by sporting so-called "logos." In his project "logo.gif," Frankfurt-based Web designer Markus Weisbeck is using a crawler to collect images named "logo.gif" from the World Wide Web. Over 200,000 sites apparently sport images with this standardised name.

Creating a successful Internet brand, however, is expensive, as the experience of Hotwired shows. Although it is the most successful Web site, it is still losing money. (In the report Wired Ventures Inc. filed with the Securities and Exchange Commission on 21 October 1996, costs of approximately $5.5 million were attributed to its online operations, offset by online revenues of only $2.3 million.) Companies hosting sites are also realising that Web content needs to be advertised not only on the Internet but also in "Real Life," in print publications and TV ads. Web sites are now an established part of the marketing mix of most US corporations.

At the current stage of agent development, sites are increasingly embedding their content within expensive agent-technology databases. When agents are freed from specific sites in the next stage of development, content will need to be created specifically for easy agent consumption.

Personalised Personal Agent Interfaces: The Cheap Way to Go

So, what could an agent interface look like and how does one deal with the problem of highly dynamic content?

In a popular but largely disappointing book about the virtual architectures of the future ("City of Bits," MIT Press, Cambridge, Massachusetts, 1996, p. 14), William Mitchell offers a simple solution to this design challenge: "While the Net disembodies human subjects, it can artificially embody these software go-betweens. It is a fairly straightforward matter of graphic interface design to represent an agent as an animated cartoon figure that appears at appropriate moments (like a well-trained waiter) to ask for instructions, reports back with a smile when it has successfully completed a mission, and appears with a frown when it has bad news. If its 'emotions' seem appropriate, you will probably like it better or trust it more. And if cartoon characters do not appeal, you might almost as easily have digital movies of actors playing cute receptionists, slick stockbrokers, dignified butlers, responsive librarians, cunning secret agents, or whatever personifications tickle your fancy."

What a wonderful world! This quote shows how naively decision makers in the New Media tackle the design problem. How can it be even remotely desirable to instil trust in certain bits of information through the appeal of the interface rather than through the nature of the information itself?

The nature of agent technology seems to demand a personified interface, or so a great many people seem to think. The dream of avatars and cyborgs goes back to the mechanical age. The writings of E.T.A. Hoffmann, Mary Shelley and Edgar Allan Poe describe these fantasies. People such as Johann Nepomuk Mälzel lived them: he presented the doppelgänger automaton known as the "Chess-Playing Turk" to American audiences in the 1820s. In fact, the human interface model proposed by William Mitchell mirrors the classic master-slave relationship found in all these visions. This is precisely the element of hierarchy that the open, uncontrolled structure of the Internet is supposedly challenging. Personification, however, brings with it a number of additional problems, the greatest being that the potential range and depth of our information sources are flattened. It blurs the clear distinction between information landscape and interface. This is also a shame for the designer, because he or she loses the ability to embed multimedia information within unique landscapes that illustrate how different elements relate to one another, thus conveying greater structures of meaning.

In his book on interface design, "About Face: The Essentials of User Interface Design" (IDG Books Worldwide, Foster City, CA, 1995, pp. 53-54), the software designer Alan Cooper describes the danger of using metaphors in software design generally. Metaphors are initially easy to comprehend, but the functionality of the program is then reduced to the simple stupidity of the metaphor. Instead, Cooper argues for an idiomatic approach, in which the user is given a simple yet powerful new language of abstract symbols and tools. One of Cooper's credos: "All idioms need to be learned. Good idioms need to be learned only once." He adds: "Searching for that magic metaphor is one of the biggest mistakes you can make in user interface design. Searching for an elusive guiding metaphor is like searching for the correct steam engine to power your aeroplane, or searching for a good dinosaur on which to ride to work. Basing a user interface design on a metaphor is not only unhelpful, it can often be quite harmful. The idea that good user interface design relies on metaphors is one of the most insidious of the many myths that permeate the software community."

The human face shares all of the problems of conventional software design metaphors. It is, in fact, one of the worst metaphors one could use. I also believe, however, that its limits will prevent it from becoming a viable alternative to idiomatic design approaches. Nevertheless, designers should be aware of the attraction this metaphor holds, especially today, in the world of commercial bots and agents.

To conclude this section, an example from the film "Star Wars" comes to mind. The robots R2-D2 and C-3PO had completely different modes of passing on information. C-3PO was a full humanoid and acted as a storytelling human. R2-D2, however, much less human in form, could project 3D images of events and narratives he had witnessed. I remember that R2-D2 used this method to project a very emotional speech by Princess Leia; what she said, I must confess, I have no idea. In this mode, R2-D2 was nothing but a future browser, a navigation interface for information landscapes. Let's think of R2-D2 when designing our interfaces in the future.

Political Agents

Alongside the creation of personified agents, a categorisation scheme for content will further alter the geography of the World Wide Web and our user interaction partners. Due to political pressure against perceived pornography on the Internet, the World Wide Web Consortium has developed the "Platform for Internet Content Selection" (PICS). PICS allows a site to be associated with a content label. Browser software can read these labels and block certain sites. CompuServe and Microsoft have already embraced PICS. PICS may well turn out to be one of the W3 Consortium's few successful activities these days.
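To make the blocking step concrete, here is a minimal Python sketch of what a PICS-aware browser does with a label. It assumes an RSACi-style label whose rating clause lists levels for nudity (n), sex (s), violence (v) and language (l), and it handles only that rating clause, not the full PICS-1.1 label grammar. The example URL and the user's limits are hypothetical, as is the choice to block unlabelled sites.

```python
import re

# Simplified sketch: find the rating clause "r (n 0 s 0 v 2 l 1)" in a
# PICS-1.1 style label. Real labels carry further fields (service URL,
# "for", "on", "by" options, comments); this sketch ignores them.
RATING_RE = re.compile(r"\br\s*\(([^)]*)\)")


def parse_ratings(label: str) -> dict[str, int]:
    """Return {category: level} from the first rating clause in the label."""
    match = RATING_RE.search(label)
    if match is None:
        return {}
    tokens = match.group(1).split()
    # Tokens alternate: category, level, category, level, ...
    return {tokens[i]: int(tokens[i + 1]) for i in range(0, len(tokens) - 1, 2)}


def is_blocked(label: str, limits: dict[str, int]) -> bool:
    """Block a site if any rated category exceeds the user's limit.

    A category missing from the label counts as level 0. Sites with no
    rating clause are blocked outright; that cautious default is an
    assumption of this sketch, not a rule of PICS.
    """
    ratings = parse_ratings(label)
    if not ratings:
        return True
    return any(ratings.get(cat, 0) > limit for cat, limit in limits.items())


label = ('(PICS-1.1 "http://www.rsac.org/ratingsv01.html" l gen true '
         'for "http://www.example.com" r (n 0 s 0 v 2 l 1))')
print(is_blocked(label, {"v": 1}))          # violence 2 exceeds limit 1
print(is_blocked(label, {"v": 2, "l": 2}))  # within both limits
```

The point the sketch illustrates is that the browser never inspects the content itself: it trusts whatever the label says, which is why the question of who does the labelling matters so much.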

The important thing about this categorisation initiative is that it can be extended beyond perceived pornography to label very diverse types of content. Educational sites, religious sites, trusted sites and politically incorrect sites can thus be identified. Sites that own rights to copyrighted digitised data can be located. The problem with PICS, of course, is: who does the labelling? Perhaps international bodies such as the World Bank and the World Wildlife Fund can find a new justification for their existence by acting as labelling bodies. In the USA, the Recreational Software Advisory Council has already proposed an "RSACi" rating system for violence, nudity and language on the Internet, which has been adopted by CompuServe.

In combination with agent technology, a content rating system becomes even more powerful. Imagine developing agent software in combination with a labelling system. Large media corporations could sell special branded agents that respond to a very detailed labelling system covering thousands of different types of content and services. They could then "grant" a label to sites that pay them an administrative fee. In exchange, the site would be picked up by the agents' radar. Imagine the following scenario: Time-Warner distributes its very advanced, funky-looking branded agent free of charge to its customers. 2,000 of these consumers register themselves in the Time-Warner database as dentists. The media conglomerate can now offer a customised "label" to sites of dental equipment manufacturers. These manufacturers will be willing to pay for the label, since they know that the agents of at least 2,000 information-hungry dentists automatically react to it.
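The gatekeeping in this scenario can be sketched in a few lines of Python. Everything here is hypothetical: the `LabelIssuer` class, the fee, the example URLs and the matching rule (an agent shows only sites carrying its user's registered profession as a label) are illustrative assumptions, not a description of any real product.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    url: str
    labels: set[str] = field(default_factory=set)


@dataclass
class LabelIssuer:
    """Hypothetical media company that ships free agents and sells labels."""
    fee: int
    revenue: int = 0
    # profession -> number of agent users registered with it
    registered: dict[str, int] = field(default_factory=dict)

    def register_user(self, profession: str) -> None:
        self.registered[profession] = self.registered.get(profession, 0) + 1

    def grant_label(self, site: Site, label: str) -> None:
        # The site pays the administrative fee in exchange for the label.
        self.revenue += self.fee
        site.labels.add(label)


def agent_picks(sites: list[Site], profession: str) -> list[str]:
    """A branded agent only 'sees' sites carrying its user's label."""
    return [s.url for s in sites if profession in s.labels]


issuer = LabelIssuer(fee=500)
for _ in range(2000):
    issuer.register_user("dentist")

drills = Site("http://drills.example")
rival = Site("http://rival-drills.example")  # did not buy a label
issuer.grant_label(drills, "dentist")
print(agent_picks([drills, rival], "dentist"))
```

Note that the unlabelled rival site is invisible to every registered dentist's agent regardless of its content: the label, not the information, decides what reaches the user.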

Border Crises and the Perceived Information Overload

The information overload that we are experiencing on the World Wide Web is taking place in our heads. Far more information is created daily in print than on the Internet. Yet we seem to feel that there is an especially large amount of data on the Net that we are missing.

The technological, social and economic development that seems to make the apparent flood of information on the Internet more manageable is at the same time threatening to dissolve the separation between interface and information landscape, and with it the incredible diversity of meaning linked to this separation. The separation itself is one of the main characteristics of the World Wide Web, rooted in the concept of hypertext and of servers and thin clients. Breaking it up may be a step backwards. Let us avoid accelerating this development by carefully selecting the forms of agent technology we employ.

Niko Waesche <waesche AT easynet.co.uk>

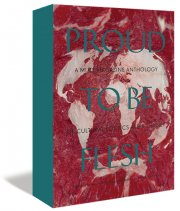
