The Battle for Broadband

A widespread broadband infrastructure is slowly limping into existence, but the issues it poses for net-cultural politics are developing apace. Felix Stalder considers the different complexions of our broadband future

Remember 1990? Technology was supposed to revolutionise everything in one fell swoop, finally eradicating all those pesky problems that bedevil modern life. The fulfilment of all our needs and wants was just around the corner. No one sang the praises of this revolution more loudly than George Gilder, the messiah of bandwidth, who dreamed of the ‘telecosm’ with its ‘crystal cathedrals of fibre.’ Unfortunately, that didn’t exactly pan out. Life is still not hassle-free, and these days broadband makes headlines through spectacular bankruptcies and lousy service, not as the road to salvation.

As usual, the future doesn’t descend on us fully formed; rather, it arrives limping. It’s a messy mix of incompatible standards, buggy technologies, and a nagging uncertainty over whether the real thing is still coming or whether it’s already over. But one overpriced cable connection, one hard-to-install DSL or ISDN link, one experimental wireless network at a time, broadband is becoming an unequally distributed reality, and the contours of this reality are emerging.

THE GOOD

The internet’s architecture used to be based on the client-server model. The server runs on a powerful machine that is continuously connected to the network and stores the data and services that clients request. The client is relatively weak and only sporadically connected to the network: typically a browser displaying the web through a dial-up connection. Broadband supports a new network architecture: peer-to-peer. Unlike old dial-up connections, broadband connections are always on, even at home. Add to this the power of the average PC, which has grown to the point where it could double as a simple server and still do all the work of a normal PC. Clients and servers now both run on powerful, continuously connected machines.

File sharing was the first application to define the culture of broadband. Imagine Napster on dial-up. Impossible. However, file sharing is not the only peer-to-peer application. Any aspect of a computer, not just its content, can be shared or pooled amongst peers in a broadband network. The sharing of processing power (CPU cycles) is the next obvious example. Clustering PCs can bring supercomputing power to people and problems outside elite research centres. When NASA abandoned the search for extraterrestrial intelligence, seti@home revived it without any of NASA’s high-end machines. Instead, it distributed the number-crunching across thousands of ordinary PCs, each processing a small segment of the job in parallel. Internet users volunteered their machines’ spare capacity for the thrill of peering into deep space and a cool screen saver. More applications of this kind are popping up: brute-force attacks on encryption keys, until recently the domain of super-expensive supercomputers, are now feasible on a network of clustered PCs. All that is needed is a program to coordinate the distribution of tasks and a pool of volunteers, neither of which is very hard to find. Other peer-to-peer architectures, such as the anonymous publishing network Freenet, share storage space across the network, making it in effect impossible to physically locate files because they move freely from peer to peer.
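
The coordination step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how seti@home or any real key-cracking project works: the functions `split_keyspace`, `search_unit` and `coordinate` are hypothetical, a thread pool stands in for the volunteer PCs, and finding the SHA-256 preimage of a small integer is a toy stand-in for testing encryption keys.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def split_keyspace(keyspace_size, unit_size):
    """Divide the keys 0 .. keyspace_size-1 into contiguous (start, end) work units."""
    return [(start, min(start + unit_size, keyspace_size))
            for start in range(0, keyspace_size, unit_size)]


def search_unit(unit, target_digest):
    """One volunteer's job: try every key in its unit, return a match or None."""
    start, end = unit
    for key in range(start, end):
        # Toy stand-in (assumption) for "does this key decrypt the message?"
        if hashlib.sha256(key.to_bytes(4, "big")).hexdigest() == target_digest:
            return key
    return None


def coordinate(keyspace_size, unit_size, target_digest, peers=4):
    """Hand the work units to a pool of simulated peers and collect the answer."""
    units = split_keyspace(keyspace_size, unit_size)
    with ThreadPoolExecutor(max_workers=peers) as pool:
        # Threads only simulate peers; a real system would ship units over the network.
        results = pool.map(search_unit, units, [target_digest] * len(units))
        for found in results:
            if found is not None:
                return found
    return None


secret = 48_213  # hypothetical key, known here only so the result can be checked
target = hashlib.sha256(secret.to_bytes(4, "big")).hexdigest()
print(coordinate(100_000, 5_000, target))  # → 48213
```

Each work unit is independent of the others, which is what makes the job easy to hand out to strangers' machines: the coordinator only needs to track which ranges are done.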

Broadband’s propensity for peer-to-peer activity is good news for anyone interested in distributed applications. Does this mean a return of the internet utopias?

THE BAD

Of course, not everyone sees broadband as the enabler of decentralised, bottom-up computing. On the contrary, most of the companies laying down the infrastructure have a very different, decidedly top-down vision: interactive TV. For them, broadband means pumping out massive video files, a kind of rerun of the video-on-demand failures of the 1980s. Through all the technological changes, this persistent vision has kept one constant component: pay-per-view.

The slump in advertising is not (only) what puts pressure on companies to charge users for access to their content; it’s the broadband environment itself. Bandwidth, despite all the excess capacity, is expensive, and pumping out video streams to the masses eats up a lot of it. Don’t expect any provider without deep pockets to do that for long. Unlike traditional broadcasting, internet streaming scales poorly. Each new user costs extra, because each user draws an individual feed: the more users, the higher the bandwidth costs. Giving away multimedia content is therefore prohibitively expensive. Moreover, as media files become richer, production costs rise. Shooting a great video tends to be more expensive than writing a good text.
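
The scaling argument is simple arithmetic. The sketch below assumes a hypothetical constant stream bitrate and ignores protocol overhead and caching; the function names are illustrative only.

```python
def unicast_egress_kbps(viewers: int, bitrate_kbps: float) -> float:
    """Internet streaming: every viewer pulls an individual feed,
    so the provider's outgoing bandwidth grows linearly with the audience."""
    return viewers * bitrate_kbps


def broadcast_egress_kbps(viewers: int, bitrate_kbps: float) -> float:
    """Traditional broadcast: one signal reaches everyone,
    so the sender's cost does not depend on audience size."""
    return bitrate_kbps if viewers > 0 else 0.0


BITRATE_KBPS = 300  # hypothetical stream quality, chosen only for illustration

for viewers in (1, 1_000, 100_000):
    print(f"{viewers:>7} viewers: "
          f"unicast {unicast_egress_kbps(viewers, BITRATE_KBPS):,} kbit/s, "
          f"broadcast {broadcast_egress_kbps(viewers, BITRATE_KBPS):,} kbit/s")
```

At the assumed 300 kbit/s, 100,000 simultaneous viewers would need 30,000,000 kbit/s (30 Gbit/s) of outgoing capacity as individual streams, against a single 300 kbit/s signal for a broadcaster.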

Both of these factors are driving the slow emergence of the pay-per-view internet, despite user reluctance. RealNetworks, for example, claims to have attracted about 500,000 people to its pay-per-use service. For $10 a month, subscribers get access to rich media content from ABCNews.com, CNN.com, and other major outlets. The ‘Passport’ platform, a one-stop digital identity service managed by our friends at Microsoft, is all about making the pay-per-use vision seamless and ‘user friendly’. Pass me the popcorn, please.

THE UGLY

As the content companies begin to implement pay-per-use services, they want to know a lot more about their users: where are they going, how long are they staying there, are they paying for content or engaging in ‘piracy’? Thanks to mergers and alliances, content providers and ISPs have become closely aligned, if not altogether the same thing. AOL Time Warner is perhaps the most extreme case, but it is representative of a general trend towards convergence. This comes in handy for monitoring users’ online behaviour for billing purposes.

It also helps to construct ‘walled gardens’, that is, to deliberately divide the network into favoured and disadvantaged zones. One way of building such walls is to make access to services offered by the same conglomerate or its corporate partners faster than access to those offered by competitors. This can be done with a new generation of ‘intelligent’ routers that enable network owners to deliver some data packets faster than others. For instance, Time magazine might load faster than Newsweek for AOL customers in the future. While this is not outright censorship, it will certainly affect browsing patterns, particularly since the manipulation is virtually invisible to the end user. Whether providers are allowed to manipulate access in such ways depends largely on regulation. Cable companies, for example, tend to be under little or no obligation to treat all traffic equally, whereas telecom companies have traditionally been bound by law to act as ‘common carriers’ that must provide the same quality of service to everyone.
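
The article does not say how such routers decide which packets go first. One common technique is strict priority queuing, and the sketch below assumes it; the `PriorityRouter` class and the `time.example` and `newsweek.example` sources are hypothetical. Packets from favoured sources always leave before anyone else’s, while arrival order is kept within each class.

```python
import heapq
from itertools import count


class PriorityRouter:
    """Toy strict-priority scheduler (assumption, not a real router's API)."""

    def __init__(self, favoured):
        self.favoured = set(favoured)  # sources the network owner wants to speed up
        self._queue = []               # heap of (priority, arrival_no, source, payload)
        self._arrival = count()        # tie-breaker: first in, first out within a class

    def enqueue(self, source, payload):
        priority = 0 if source in self.favoured else 1  # 0 is served first
        heapq.heappush(self._queue, (priority, next(self._arrival), source, payload))

    def dequeue(self):
        _, _, source, payload = heapq.heappop(self._queue)
        return source, payload


router = PriorityRouter(favoured={"time.example"})
router.enqueue("newsweek.example", "packet 1")
router.enqueue("newsweek.example", "packet 2")
router.enqueue("time.example", "packet 3")  # arrives last, leaves first
order = [router.dequeue()[0] for _ in range(3)]
print(order)  # ['time.example', 'newsweek.example', 'newsweek.example']
```

Nothing is blocked outright; the disadvantaged traffic simply waits, which fits the article’s point that this is not censorship but is hard for the end user to see.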

Another potentially ugly side of broadband culture is paranoia. With home computers permanently connected to the network, a whole new class of internet users can become targets of malicious hackers. Most of these users lack the skills to secure their own machines, so they are especially at risk of having them compromised and, for example, turned into launching pads for more serious attacks. Here’s where the paranoia sets in. As more users feel threatened by something they essentially do not understand, popular support for new law enforcement measures could grow. For the majority of users, it will be easier to support harsher penalties than to maintain complex firewalls. The overhyped threat of hackers can easily be turned into a more general attack on civil liberties online. Together, the push towards pay-per-use and an escalating fight against ‘hackers’ and ‘pirates’ might squeeze privacy out of the emerging culture altogether.

HOW GOOD, HOW BAD AND HOW UGLY?

The contours sketched here don’t add up to a coherent picture. The culture of broadband is still emerging. To some degree, distributed peer-to-peer services and pay-per-use services are in conflict. Even after being acquired by Bertelsmann, Napster is still offline, because the underlying conflict between freedom and control is hard to resolve. Technologies and their applications are still searching for a stable configuration. However, concerted action will be necessary to support the good, avoid the bad and combat the ugly.

Felix Stalder <felix AT openflows.org> is a researcher and writer living in Toronto. He is a director of Openflows, a moderator of the nettime mailing list and a human being. Openflows [http://felix.openflows.org]

seti@home [http://setiathome.ssl.berkeley.edu/]

Freenet [http://freenetproject.org/cgi-bin/twiki/view/Main/WebHome]

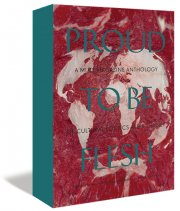
